Execute Command In Datastage

Now we will see some UNIX find command examples combined with the xargs command. xargs can be used to do whatever you like with each file found by find: for example, you can delete the file, list its contents, or apply any other command to it. Using the Stored Procedure stage in DataStage, you can call a SQL Server stored procedure. When you call the stored procedure from DataStage, we have noticed that the correct return code is not sent back to DataStage from SQL Server. That means even if there is an issue with the stored procedure, DataStage will.

I am trying to compile DataStage jobs using the Execute Command stage in DataStage 11, or with a routine if possible. My DataStage server is on a Unix machine.

I tried following the link below, but I don't know how to do it.
https://www-01.ibm.com/support/docview.wss?uid=swg21595194

So, how can I compile a DataStage job in UNIX from the command line or from a routine?

Please help me in doing so.

Thank you.

2 Answers

It is not possible to compile DataStage jobs from the Unix server.

DataStage jobs can only be compiled from the client machine (which runs Windows). You can do this through the DataStage Designer client or from the command line with the 'dscc' command.

The link you shared covers the dsjob command, which is for running and resetting jobs.

Why are you trying to compile the job? If you are compiling it because of an abort, you can reset it instead.

You can reset your job from the UNIX server using the command below:

${DSHome}/dsjob -run -mode RESET -wait -jobstatus ${ProjectName} ${JobName}
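As a rough sketch, the reset command can be wrapped in a small shell function so that a calling script can inspect the exit status. The function name reset_job and the DSJOB_BIN override are assumptions made for illustration; the dsjob options are the ones from the command above. With -jobstatus, dsjob derives its exit code from the job's final status, so the value should be checked against the dsjob documentation for your version rather than assumed to be zero on success.

```shell
# Hypothetical wrapper around the reset command shown above.
# DSJOB_BIN is an illustrative override; by default the path from the
# answer (${DSHome}/dsjob) is used.
reset_job() {
    project="$1"
    job="$2"
    dsjob="${DSJOB_BIN:-${DSHome}/dsjob}"

    # Reset the job and wait for it to finish.
    "$dsjob" -run -mode RESET -wait -jobstatus "$project" "$job"
    rc=$?

    # Report the raw exit status; its meaning depends on the job status.
    echo "dsjob exited with status $rc for $project/$job"
    return "$rc"
}

# Example call (project and job names are placeholders):
#   reset_job "$ProjectName" "$JobName"
```

Returning the raw status instead of interpreting it keeps the wrapper honest: the caller decides which status values count as an acceptable outcome.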


Hope it will be helpful!


There have always been requirements where you need to run certain UNIX commands or shell scripts within DataStage. Although not a popular demand, it's still something that belongs in the ‘good to know’ category. There are a number of different ways to do this. I will try to explain the methods I have tried out.

Specifying the shell script in job properties

If you have pre- or post-processing requirements that must run along with the job, and you have implemented them in a shell script, you can specify the script, with its parameters, in the Before-job subroutine or After-job subroutine section of the job properties. First select the ‘ExecSH’ option from the drop-down list to indicate that you are going to run a shell script.
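To make this concrete, here is a minimal sketch of the kind of script I might point ExecSH at. The function archive_output, the file it moves, and both parameters are hypothetical and only illustrate a script that takes parameters and signals failure through its exit status; as far as I know, a non-zero exit status is what the subroutine reports back as an error, but verify that for your version.

```shell
#!/bin/bash
# Hypothetical post-processing helper: moves a finished output file into
# an archive directory. Both arguments are illustrative placeholders.
archive_output() {
    src="$1"
    archive_dir="$2"

    # Fail with a non-zero status if the expected file is missing.
    if [ ! -f "$src" ]; then
        echo "archive_output: missing file: $src" >&2
        return 1
    fi

    # Create the archive directory if needed, then move the file into it.
    mkdir -p "$archive_dir" && mv "$src" "$archive_dir/"
}

# When the file is run as a script with two parameters, act on them.
if [ "$#" -ge 2 ]; then
    archive_output "$1" "$2"
fi
```

The input value entered for the subroutine would then be the script path followed by its two parameters, separated by spaces.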

Using the External filter stage

The External Filter stage allows us to run UNIX filters during the execution of a job. Filters are programs that read data from the input stream, modify it, and send it on to the output stream. A filter that everyone is bound to have used is ‘grep’. Other common filters are cut, cat, head, sort, uniq, perl, sh, wc and tail. The External Filter stage lets us run these commands while the job is processing its data. For example, you can use the grep command to filter the incoming data. Shown below is a simple design.

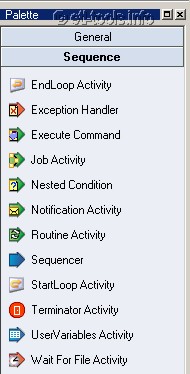


As the command shows, we use grep to filter the incoming data for rows that contain the number 18.
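The same filter can be tried outside DataStage as a quick check. This is only a sketch: the sample rows and the helper name sample_rows are made up for illustration, and in the job the stage would feed the real records to grep on standard input.

```shell
# Made-up sample rows standing in for the data the stage would receive.
sample_rows() {
    printf '%s\n' '1,Alice,18' '2,Bob,25' '3,Carol,18'
}

# grep passes through only the rows that contain the string 18.
sample_rows | grep 18
```

Note that a plain grep 18 matches the string anywhere in the row, so a row with an id such as 118 would also pass. If that matters, grep -w 18 restricts the match to 18 as a whole word.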

Using the sequential file stage

Although it is not a frequently used option, the Sequential File stage also allows us to run UNIX filter commands inside it. In this example I have written a shell script that is called inside the stage. The place where you have to enter the command is shown below.

The shell script is a straightforward one that reads rows directly from standard input.


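#!/bin/bash
#------------ Shell script ------------#
# Reads each input row from stdin and appends it, tagged, to the output file.
while IFS= read -r data; do
    echo "$data, Modified by emerson" >> E:/Sample/sample.txt
    # You can add your processing logic here
done
#------------ End of script ------------#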

The output would be as below.